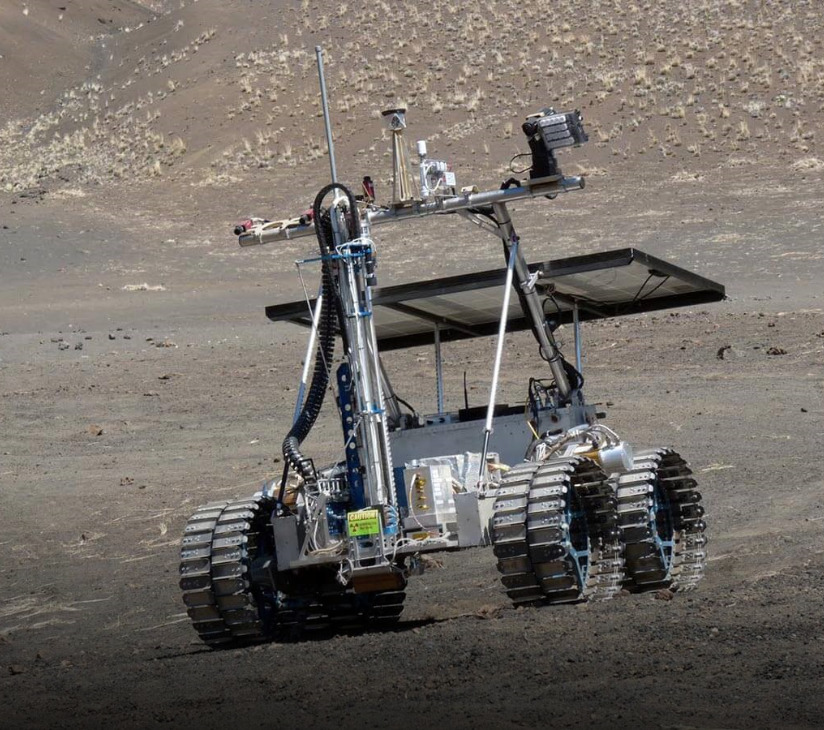

NGC’s Vision-Based Navigation system for rovers has been deployed onboard the Artemis Jr Moon exploration prototype rover. It also successfully supported the field deployment campaign for the NASA RESOLVE experiment, whose primary goal is to characterize water and other volatiles in the lunar regolith.

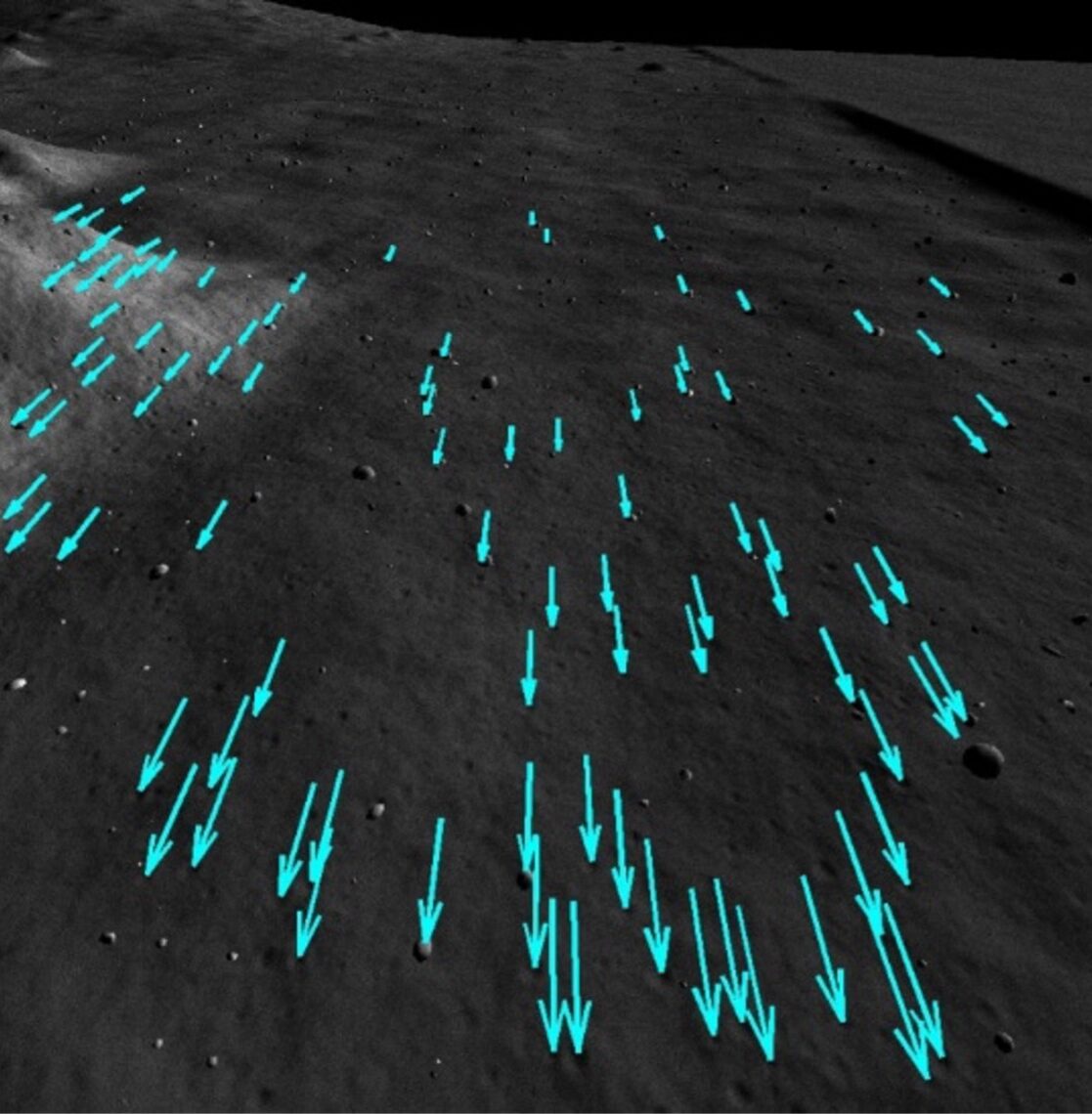

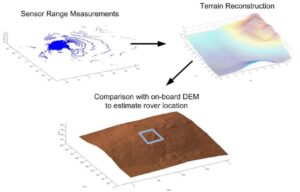

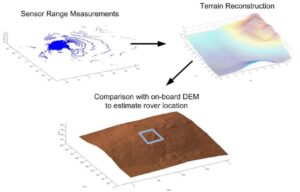

NGC also developed a complementary Lidar-based localisation system that reconstructs surrounding terrain topography and matches it to a reference Digital Elevation Map (DEM) of the area. This system was also field-demonstrated as part of the Artemis Jr development activities.
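The core idea of matching reconstructed terrain against a reference DEM can be illustrated with a brute-force template search. The sketch below is a hypothetical, simplified stand-in and is not NGC's algorithm. It assumes the Lidar reconstruction has already been resampled onto a grid with the same cell size and orientation as the DEM, so heading is known and only a horizontal offset is searched. The function name `match_patch_to_dem` is illustrative. Each window has its mean removed so that an unknown vertical bias between the two elevation sources does not affect the match.

```python
import numpy as np


def match_patch_to_dem(dem, patch):
    """Hypothetical sketch: find where a local elevation patch best fits a DEM.

    Assumes both grids share cell size and orientation (no rotation search).
    Returns ((row, col), cost), where (row, col) is the top-left cell of the
    best-matching DEM window and cost is the sum of squared differences
    after removing each window's mean elevation.
    """
    dem = np.asarray(dem, dtype=float)
    patch = np.asarray(patch, dtype=float)
    ph, pw = patch.shape
    dh, dw = dem.shape
    p = patch - patch.mean()  # remove vertical bias from the local terrain
    best_offset, best_cost = None, np.inf
    # Exhaustively slide the patch over every valid DEM position.
    for r in range(dh - ph + 1):
        for c in range(dw - pw + 1):
            window = dem[r:r + ph, c:c + pw]
            w = window - window.mean()  # remove vertical bias from the DEM window
            cost = float(np.sum((w - p) ** 2))
            if cost < best_cost:
                best_offset, best_cost = (r, c), cost
    return best_offset, best_cost


# Usage with synthetic data: cut a patch out of a random "DEM", shift it up by
# 5 m to mimic a vertical bias, then recover its horizontal position.
rng = np.random.default_rng(0)
dem = rng.normal(size=(40, 30))
patch = dem[12:22, 7:15] + 5.0
offset, cost = match_patch_to_dem(dem, patch)
print(offset)  # (12, 7)
```

A practical system would also need to search over heading, weight cells by Lidar confidence, and use a faster correlation method than this exhaustive loop. The mean-removed squared-difference cost is chosen here only because it is simple and insensitive to a constant elevation offset.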

NGC is currently part of the Canadensys team for the CSA Lunar Rover Mission (LRM). CSA has mandated the team to design a microrover concept that will explore a polar region of the Moon in 2026.

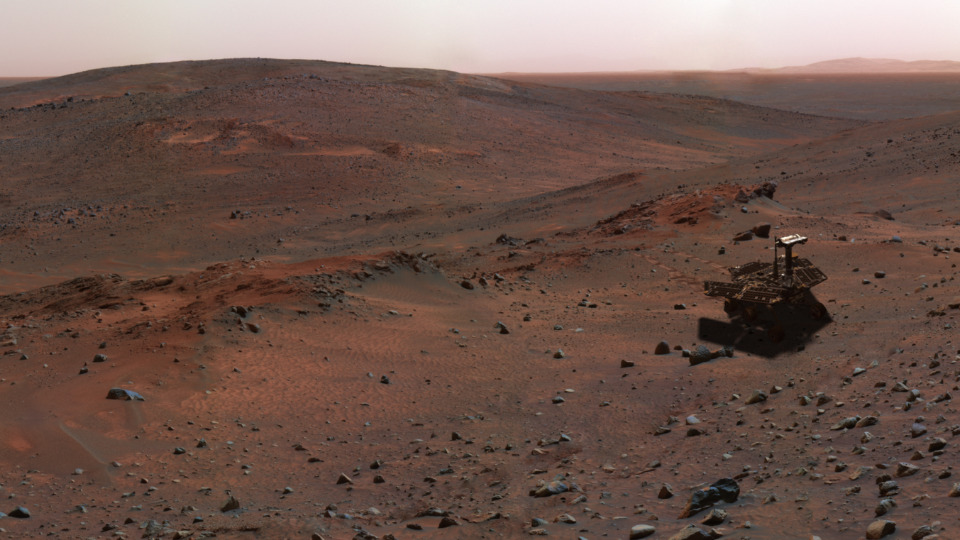

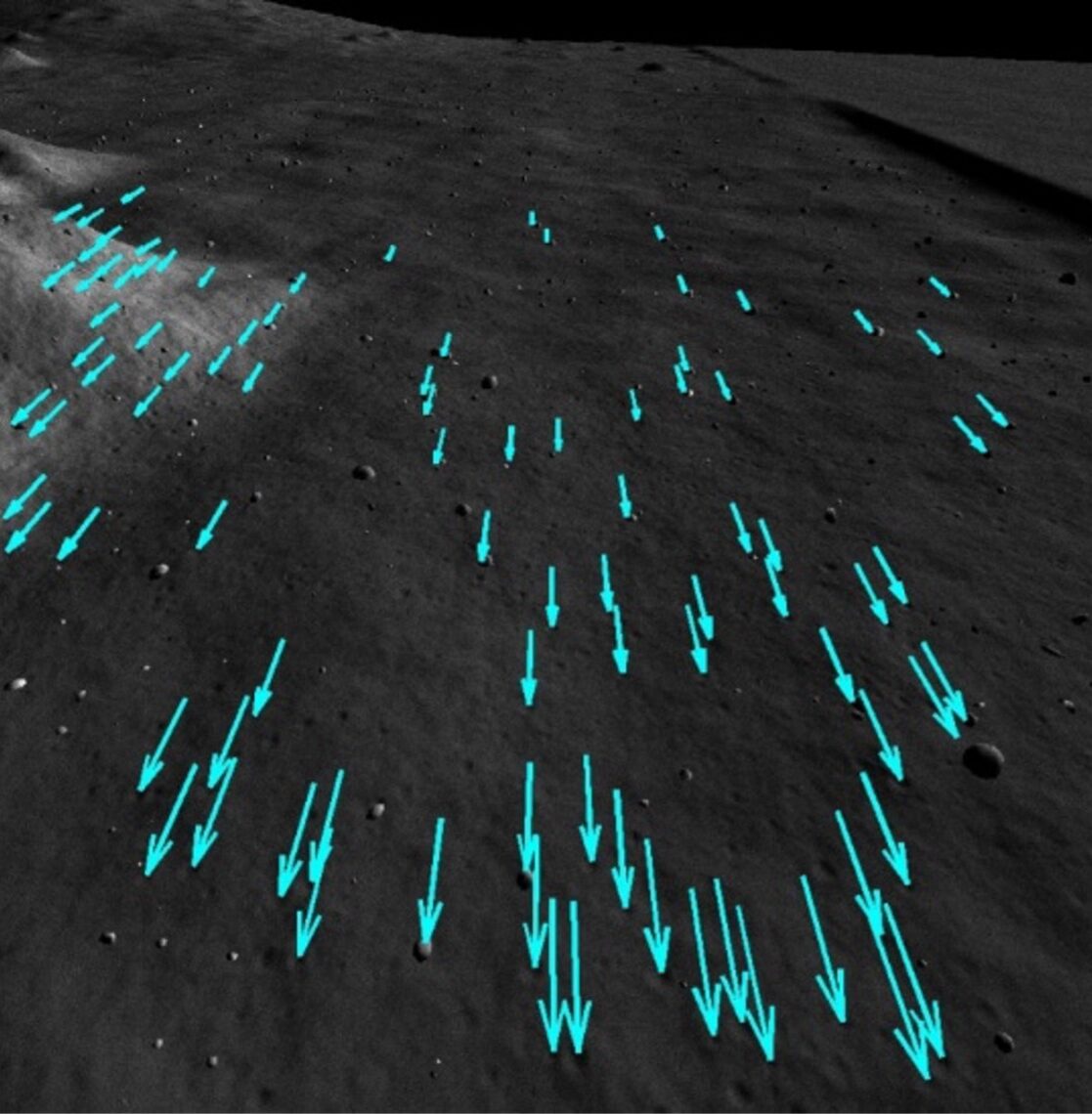

Rover motion detection from image processing

Rover Absolute Position Determination System